ClickHouse搭建

1.环境要求

系统必须是x86_64并且可以使用SSE 4.2 指令集。这是检查当前 CPU 是否支持 SSE 4.2 的命令:

grep -q sse4_2 /proc/cpuinfo && echo "SSE 4.2 supported" || echo "SSE 4.2 not supported"

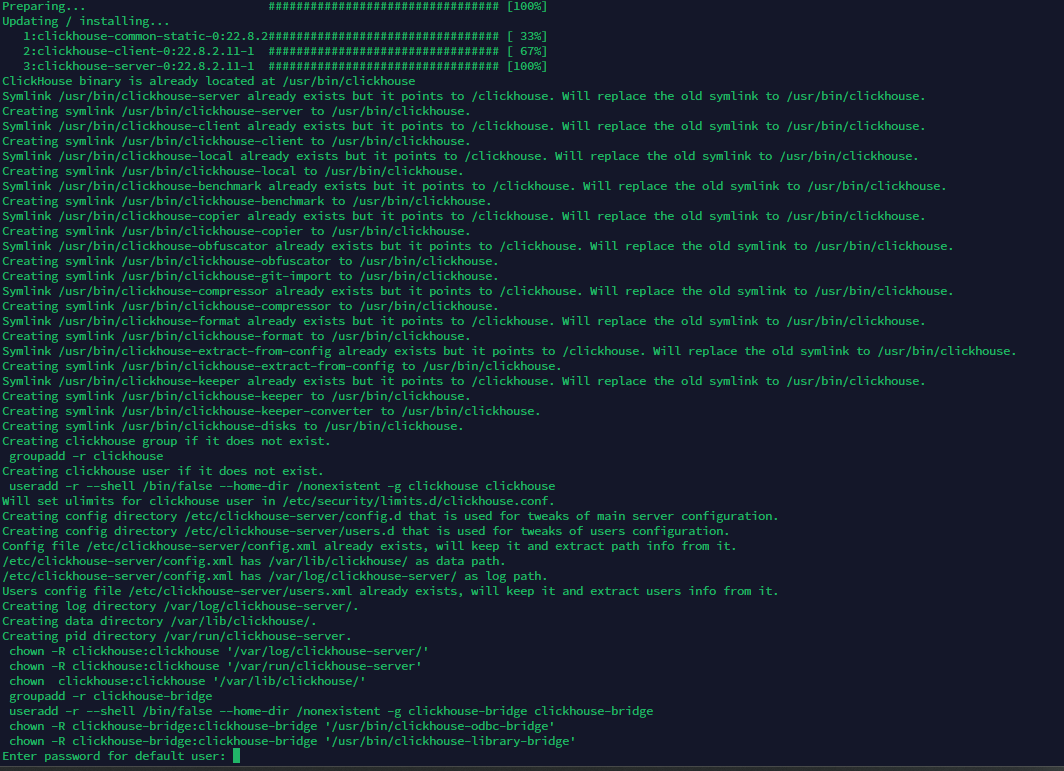

2.安装

从官网下载最新的RPM包分别是clickhouse-common-static-22.7.3.5.x86_64.rpm clickhouse-server-22.7.3.5.x86_64.rpm clickhouse-client-22.7.3.5.x86_64.rpm

执行命令

sudo rpm -ivh clickhouse-*rpm

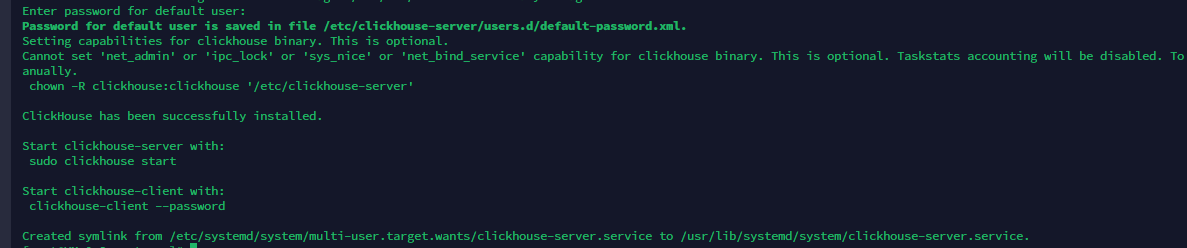

安装server时会让输入default用户密码

安装完毕

3.启动服务

# 启动服务

sudo systemctl start clickhouse-server.service

# 查看状态

sudo systemctl status clickhouse-server.service

4.连接

clickhouse-client --host="127.0.0.1" --port="9000" --user="default" --password='abc123456'

5.修改数据存储地址

涉及到的配置,配置文件默认路径在/etc/clickhouse-server/config.xml

<path>/data/clickhouse/</path>

<tmp_path>/data/clickhouse/tmp/</tmp_path>

<user_files_path>/data/clickhouse/user_files/</user_files_path>

<format_schema_path>/data/clickhouse/format_schemas/</format_schema_path>

<user_directories>

<users_xml>

<path>users.xml</path>

</users_xml>

<local_directory>

<path>/data/clickhouse/access/</path>

</local_directory>

</user_directories>

修改后重启服务即可

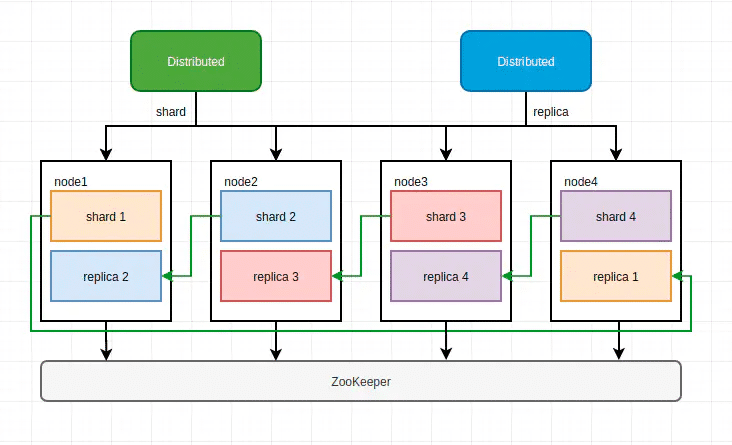

6.集群模式

集群模式需要安装zookeeper集群,由于ck对zookeeper集群依赖较大,建议单独安装,不要与其他服务共用。

公司服务器共5台,因此准备搭建5分片,5副本集群。参考

因单Node需运行两个ck进程,需要将配置文件,数据目录,日志目录,端口等分离,此处是配置文件示例

<?xml version="1.0"?>

<clickhouse>

<logger>

<level>information</level>

<log>/var/log/replica-clickhouse/replica-clickhouse.log</log>

<errorlog>/var/log/replica-clickhouse/replica-clickhouse.err.log</errorlog>

<size>1000M</size>

<count>10</count>

</logger>

<http_port>18123</http_port>

<tcp_port>19000</tcp_port>

<mysql_port>19004</mysql_port>

<postgresql_port>19005</postgresql_port>

<interserver_http_port>19009</interserver_http_port>

<listen_host>0.0.0.0</listen_host>

<zookeeper incl="zookeeper-servers" optional="true"/>

<macros incl="macros" optional="true"/>

<remote_servers incl="remote_servers" optional="true"/>

<include_from>/etc/clickhouse-config/replica/metrika.xml</include_from>

<max_connections>4096</max_connections>

<keep_alive_timeout>3</keep_alive_timeout>

<grpc>

<enable_ssl>false</enable_ssl>

<ssl_cert_file>/path/to/ssl_cert_file</ssl_cert_file>

<ssl_key_file>/path/to/ssl_key_file</ssl_key_file>

<ssl_require_client_auth>false</ssl_require_client_auth>

<ssl_ca_cert_file>/path/to/ssl_ca_cert_file</ssl_ca_cert_file>

<transport_compression_type>none</transport_compression_type>

<transport_compression_level>0</transport_compression_level>

<max_send_message_size>-1</max_send_message_size>

<max_receive_message_size>-1</max_receive_message_size>

<verbose_logs>false</verbose_logs>

</grpc>

<openSSL>

<server> <!-- Used for https server AND secure tcp port -->

<certificateFile>/etc/replica-clickhouse/server.crt</certificateFile>

<privateKeyFile>/etc/replica-clickhouse/server.key</privateKeyFile>

<verificationMode>none</verificationMode>

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

</server>

<client> <!-- Used for connecting to https dictionary source and secured Zookeeper communication -->

<loadDefaultCAFile>true</loadDefaultCAFile>

<cacheSessions>true</cacheSessions>

<disableProtocols>sslv2,sslv3</disableProtocols>

<preferServerCiphers>true</preferServerCiphers>

<!-- Use for self-signed: <verificationMode>none</verificationMode> -->

<invalidCertificateHandler>

<!-- Use for self-signed: <name>AcceptCertificateHandler</name> -->

<name>RejectCertificateHandler</name>

</invalidCertificateHandler>

</client>

</openSSL>

<max_concurrent_queries>100</max_concurrent_queries>

<max_server_memory_usage>0</max_server_memory_usage>

<max_thread_pool_size>10000</max_thread_pool_size>

<max_server_memory_usage_to_ram_ratio>0.9</max_server_memory_usage_to_ram_ratio>

<total_memory_profiler_step>4194304</total_memory_profiler_step>

<total_memory_tracker_sample_probability>0</total_memory_tracker_sample_probability>

<uncompressed_cache_size>8589934592</uncompressed_cache_size>

<mark_cache_size>5368709120</mark_cache_size>

<mmap_cache_size>1000</mmap_cache_size>

<compiled_expression_cache_size>134217728</compiled_expression_cache_size>

<compiled_expression_cache_elements_size>10000</compiled_expression_cache_elements_size>

<path>/data/replica/</path>

<tmp_path>/data/replica/tmp/</tmp_path>

<user_files_path>/data/replica/user_files/</user_files_path>

<ldap_servers>

</ldap_servers>

<user_directories>

<users_xml>

<path>users.xml</path>

</users_xml>

<local_directory>

<path>/data/replica/access/</path>

</local_directory>

</user_directories>

<default_profile>default</default_profile>

<default_database>default</default_database>

<mlock_executable>true</mlock_executable>

<remap_executable>false</remap_executable>

<builtin_dictionaries_reload_interval>3600</builtin_dictionaries_reload_interval>

<max_session_timeout>3600</max_session_timeout>

<default_session_timeout>60</default_session_timeout>

<prometheus>

<endpoint>/metrics</endpoint>

<port>19363</port>

<metrics>true</metrics>

<events>true</events>

<asynchronous_metrics>true</asynchronous_metrics>

<status_info>true</status_info>

</prometheus>

<query_log>

<database>system</database>

<table>query_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</query_log>

<trace_log>

<database>system</database>

<table>trace_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</trace_log>

<query_thread_log>

<database>system</database>

<table>query_thread_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</query_thread_log>

<query_views_log>

<database>system</database>

<table>query_views_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</query_views_log>

<part_log>

<database>system</database>

<table>part_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</part_log>

<metric_log>

<database>system</database>

<table>metric_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

<collect_interval_milliseconds>1000</collect_interval_milliseconds>

</metric_log>

<asynchronous_metric_log>

<database>system</database>

<table>asynchronous_metric_log</table>

<flush_interval_milliseconds>7000</flush_interval_milliseconds>

</asynchronous_metric_log>

<opentelemetry_span_log>

<engine>

engine MergeTree

partition by toYYYYMM(finish_date)

order by (finish_date, finish_time_us, trace_id)

</engine>

<database>system</database>

<table>opentelemetry_span_log</table>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</opentelemetry_span_log>

<crash_log>

<database>system</database>

<table>crash_log</table>

<partition_by/>

<flush_interval_milliseconds>1000</flush_interval_milliseconds>

</crash_log>

<session_log>

<database>system</database>

<table>session_log</table>

<partition_by>toYYYYMM(event_date)</partition_by>

<flush_interval_milliseconds>7500</flush_interval_milliseconds>

</session_log>

<top_level_domains_lists>

</top_level_domains_lists>

<dictionaries_config>*_dictionary.xml</dictionaries_config>

<user_defined_executable_functions_config>*_function.xml</user_defined_executable_functions_config>

<encryption_codecs>

</encryption_codecs>

<distributed_ddl>

<path>/clickhouse/task_queue/ddl</path>

</distributed_ddl>

<graphite_rollup_example>

<pattern>

<regexp>click_cost</regexp>

<function>any</function>

<retention>

<age>0</age>

<precision>3600</precision>

</retention>

<retention>

<age>86400</age>

<precision>60</precision>

</retention>

</pattern>

<default>

<function>max</function>

<retention>

<age>0</age>

<precision>60</precision>

</retention>

<retention>

<age>3600</age>

<precision>300</precision>

</retention>

<retention>

<age>86400</age>

<precision>3600</precision>

</retention>

</default>

</graphite_rollup_example>

<format_schema_path>/data/replica/format_schemas/</format_schema_path>

<query_masking_rules>

<rule>

<name>hide encrypt/decrypt arguments</name>

<regexp>((?:aes_)?(?:encrypt|decrypt)(?:_mysql)?)\s*\(\s*(?:'(?:\\'|.)+'|.*?)\s*\)</regexp>

<replace>\1(???)</replace>

</rule>

</query_masking_rules>

<send_crash_reports>

<enabled>false</enabled>

<anonymize>false</anonymize>

<endpoint>https://6f33034cfe684dd7a3ab9875e57b1c8d@o388870.ingest.sentry.io/5226277</endpoint>

</send_crash_reports>

</clickhouse>

metrika.xml文件,该文件主要涉及分片与副本分配

<yandex>

<remote_servers>

<wxpt_cluster>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>10.172.134.200</host>

<port>9000</port>

<user>default</user>

<password>abc123456</password>

</replica>

<replica>

<host>10.172.134.201</host>

<port>19000</port>

<user>default</user>

<password>abc123456</password>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>10.172.134.201</host>

<port>9000</port>

<user>default</user>

<password>abc123456</password>

</replica>

<replica>

<host>10.172.134.202</host>

<port>19000</port>

<user>default</user>

<password>abc123456</password>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>10.172.134.202</host>

<port>9000</port>

<user>default</user>

<password>abc123456</password>

</replica>

<replica>

<host>10.172.134.203</host>

<port>19000</port>

<user>default</user>

<password>abc123456</password>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>10.172.134.203</host>

<port>9000</port>

<user>default</user>

<password>abc123456</password>

</replica>

<replica>

<host>10.172.134.204</host>

<port>19000</port>

<user>default</user>

<password>abc123456</password>

</replica>

</shard>

<shard>

<internal_replication>true</internal_replication>

<replica>

<host>10.172.134.204</host>

<port>9000</port>

<user>default</user>

<password>abc123456</password>

</replica>

<replica>

<host>10.172.134.200</host>

<port>19000</port>

<user>default</user>

<password>abc123456</password>

</replica>

</shard>

</wxpt_cluster>

</remote_servers>

<zookeeper-servers>

<node index="1">

<host>wxpt-ck-01</host>

<port>2181</port>

</node>

<node index="2">

<host>wxpt-ck-02</host>

<port>2181</port>

</node>

<node index="3">

<host>wxpt-ck-03</host>

<port>2181</port>

</node>

</zookeeper-servers>

<!--下面两个参数每个服务不相同-->

<!--第1个分片的第1个副本-->

<macros>

<shard>SHARD-01</shard>

<replica>REPLICA-01</replica>

</macros>

</yandex>

replica-clickhouse.server 配置,整体复制clickhouse-server.service,需修改配置文件路径与服务名称

sudo vim /lib/systemd/system/replica-clickhouse.service

# 输入下面

[Unit]

Description=ClickHouse Server (analytic DBMS for big data)

Requires=network-online.target

# NOTE: that After/Wants=time-sync.target is not enough, you need to ensure

# that the time was adjusted already, if you use systemd-timesyncd you are

# safe, but if you use ntp or some other daemon, you should configure it

# additionaly.

After=time-sync.target network-online.target

Wants=time-sync.target

[Service]

Type=simple

User=clickhouse

Group=clickhouse

Restart=always

RestartSec=30

RuntimeDirectory=replica-clickhouse

ExecStart=/usr/bin/clickhouse-server --config=/etc/clickhouse-config/replica/config.xml --pid-file=/run/replica-clickhouse/replica-clickhouse.pid

# Minus means that this file is optional.

EnvironmentFile=-/etc/default/clickhouse

LimitCORE=infinity

LimitNOFILE=500000

CapabilityBoundingSet=CAP_NET_ADMIN CAP_IPC_LOCK CAP_SYS_NICE CAP_NET_BIND_SERVICE

[Install]

# ClickHouse should not start from the rescue shell (rescue.target).

WantedBy=multi-user.target

配置完毕后,启动集群服务

# 重载服务

sudo systemctl daemon-reload

sudo systemctl restart clickhouse-server.service

sudo systemctl status clickhouse-server.service

sudo systemctl restart replica-clickhouse.service

sudo systemctl status replica-clickhouse.service

中途可能会遇到一些问题,大部分原因是由于配置文件配置错误导致,需结合错误日志文件进行处理。

Comments | NOTHING